When examining why cyber risk is ‘dynamic’, it becomes clear that a different approach to risk is needed

The trillions of dollars spent every year on digital transformation have made large corporate IT networks ever more complex. Meanwhile, attackers in pursuit of financial gain are constantly evolving their tactics, techniques, and procedures to get around security controls, gain access to these networks, and steal data.

Cyber is a human behavior risk.

Humans are dynamic and so is cyber risk.

Controls that worked on attackers yesterday may not work today. Despite the increased risks, digital transformation will remain a business imperative. But companies are losing visibility into their own IT environments, and conventional approaches to managing the risk are proving insufficient.

From the beginning, Intangic’s approach to cyber has rested on the view that it is a dynamic risk. Some people in the insurance industry are now coming around to this view. But it is important to examine what makes it so and, more importantly, what it means for risk and security teams that need better ways to manage, mitigate, and transfer the risk. Though underwriting results for insurers have no doubt improved since 2020, the growing cyber coverage gap and the frequency of large uninsured losses show that outcomes for too many risk managers have not.

Our job is to help risk and security teams avoid losses, determine their risk appetite, and achieve better financial outcomes.

When we set out to tackle the problems risk managers face, we saw that the fundamental realities of digital transformation and threat actor behavior required a different approach to assessing and modelling the risk if we were to deliver on this mission.

Growing Network Complexity

Companies globally are investing trillions of dollars per year in digital transformation. As a result, IT networks for large organizations are more complex than ever and are only becoming more so. According to a 2023 survey from Splunk (acquired earlier this year by Cisco for $28 billion), organizations report operating and maintaining, on average, 165 internally developed business applications, roughly half of which run in the public cloud (51%) and half on-premises (49%).

A number ‘incomprehensibly large’

One useful way to gauge the growing complexity of IT networks is to look at the number of IP addresses attached to them. Internet Protocol version 4 (IPv4) is part of the backbone of the internet. This protocol has capacity for approximately 4.3 billion IP addresses (2^32), but that is no longer sufficient to meet the growing demand for connected devices driven by digital transformation and the proliferation of IoT devices. The transition to IPv6 brings a nearly unlimited address space of roughly 340 undecillion addresses (2^128), that is, 340 followed by 36 zeros, a number almost incomprehensibly large. This gives networks the headroom to scale with the surge in size and complexity seen today, especially in large corporate networks.
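The address-space figures above follow directly from the width of each address: 32 bits for IPv4 and 128 bits for IPv6. The short Python sketch below reproduces the arithmetic; it uses only those bit widths and the short-scale definition of an undecillion (10^36), and is meant as an illustration rather than anything drawn from the sources cited here.

```python
# IPv4 addresses are 32 bits wide and IPv6 addresses are 128 bits wide,
# so each address space holds 2**bits possible addresses.
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_space = 2 ** IPV4_BITS  # 4,294,967,296, i.e. "approximately 4.3 billion"
ipv6_space = 2 ** IPV6_BITS  # about 3.4 x 10**38

# "340 undecillion" means 340 x 10**36 (short scale: 1 undecillion = 10**36).
undecillions = ipv6_space // 10**36

print(f"IPv4 addresses: {ipv4_space:,}")
print(f"IPv6 addresses: {ipv6_space:.2e} (~{undecillions} undecillion)")
print(f"IPv6 space is {ipv6_space // ipv4_space:.2e} times larger than IPv4")
```

Each extra address bit doubles the space, so the 96 additional bits in IPv6 multiply the IPv4 space by 2^96, roughly 7.9 x 10^28.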

The question then becomes how well security teams can maintain a clear line of sight across these growing networks. Organizations said they had “excellent visibility” into individual components of their environment, such as on-prem legacy infrastructure, private cloud infrastructure, and security posture, no more than 52% of the time. A driver who could see the road clearly only about half the time would be said to have poor visibility, and we know that low visibility raises the likelihood of accidents.

It should come as no surprise that cyberattacks are a significant cause of IT downtime for large organizations, contributing to around 20-25% of such incidents. We return to the economic cost of these events for businesses below.

To keep watch over these constantly changing networks, most companies (66%) were using AI/machine learning tools long before the AI frenzy of the past 18 months. New threat detection and observability technologies are helping, but not nearly enough. According to Google Cloud, over the course of 2023, organizations were notified of a ransomware incident by an external source 70% of the time. In those cases, the attacker’s ransom note tipped the company off 76% of the time (vs. 24% from external partners via endpoint detection and response (EDR) technologies).
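Because the 76% figure applies only to incidents where an external source notified the victim, the two percentages have to be multiplied to express them as a share of all ransomware incidents. The Python sketch below does that using only the Google Cloud figures quoted above; it additionally assumes, for illustration, that the remaining 30% of incidents were detected by the organization itself.

```python
# Shares quoted in the text for 2023 ransomware incidents (attributed to Google Cloud).
EXTERNAL_NOTICE = 0.70             # victim was notified by an external source
RANSOM_NOTE_GIVEN_EXTERNAL = 0.76  # ...and that source was the attacker's ransom note
PARTNER_GIVEN_EXTERNAL = 0.24      # ...or an external partner

# Multiplying a conditional share by the share it is conditioned on
# gives a share of *all* ransomware incidents.
ransom_note_overall = EXTERNAL_NOTICE * RANSOM_NOTE_GIVEN_EXTERNAL
partner_overall = EXTERNAL_NOTICE * PARTNER_GIVEN_EXTERNAL

# ASSUMPTION (not stated in the source): every incident without an external
# notification was detected internally.
internal_overall = 1 - EXTERNAL_NOTICE

for label, share in [
    ("Discovered via attacker's ransom note", ransom_note_overall),
    ("Notified by an external partner", partner_overall),
    ("Detected internally (assumed)", internal_overall),
]:
    print(f"{label}: {share:.1%}")
```

Read this way, the figures imply that roughly 53% of all ransomware incidents came to light only when the attacker announced them.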

Stepping back, this captures the tension between the upside and downside of digital transformation. Consider this remark from Clorox CEO Linda Rendle on an earnings call in 2022, about one year before the company’s large business interruption (BI) event in 2023:

“….we believe very strongly that we can be more simple and fast, and that will help support that 3% to 5% growth rate that we have and restoring margins. We want to cut down on decision time. We want to ensure that the technology we put in place through our digital transformation is supported by a structure that enables it and uses it as fast as we possibly can to ensure that we’re closer to the customer and closer to the consumer.”

In short, large companies have no choice but to keep investing in digital transformation, further expanding their already dynamic networks. But the downside risks this creates are growing in complexity and frequency, and security and risk teams are struggling to manage them adequately, as Clorox discovered in its costly 2023 breach.

Growing frequency of attacks

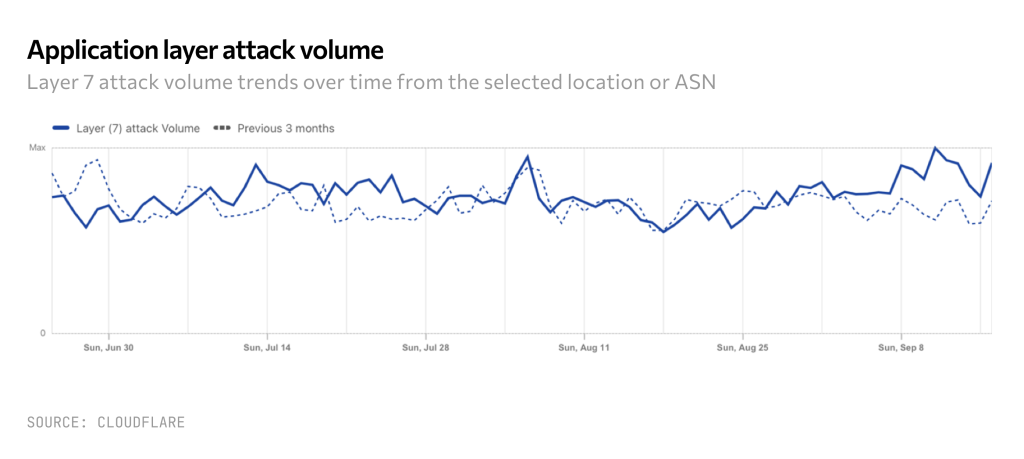

According to Cloudflare, large organizations face billions of cyberattacks every day. Note in the graph above that attack volume stays constant even though the types of attacks change.

We have written previously about why companies can no longer rely on strong controls: successful breaches increasingly involve circumventing controls altogether. This is one example of how cyber is a behavioral risk and, as a result, a dynamic one. Controls that worked on attackers yesterday may not work today.

The recent Cisco Talos Incident Response Report found that circumvention of multi-factor authentication (MFA) was an issue in around 50% of all security incidents Cisco Talos handled in Q1 2024. High-profile breaches such as those at MGM and Caesars illustrated the limits of controls against attackers who are increasingly adept at working around them and even turning them to their advantage.

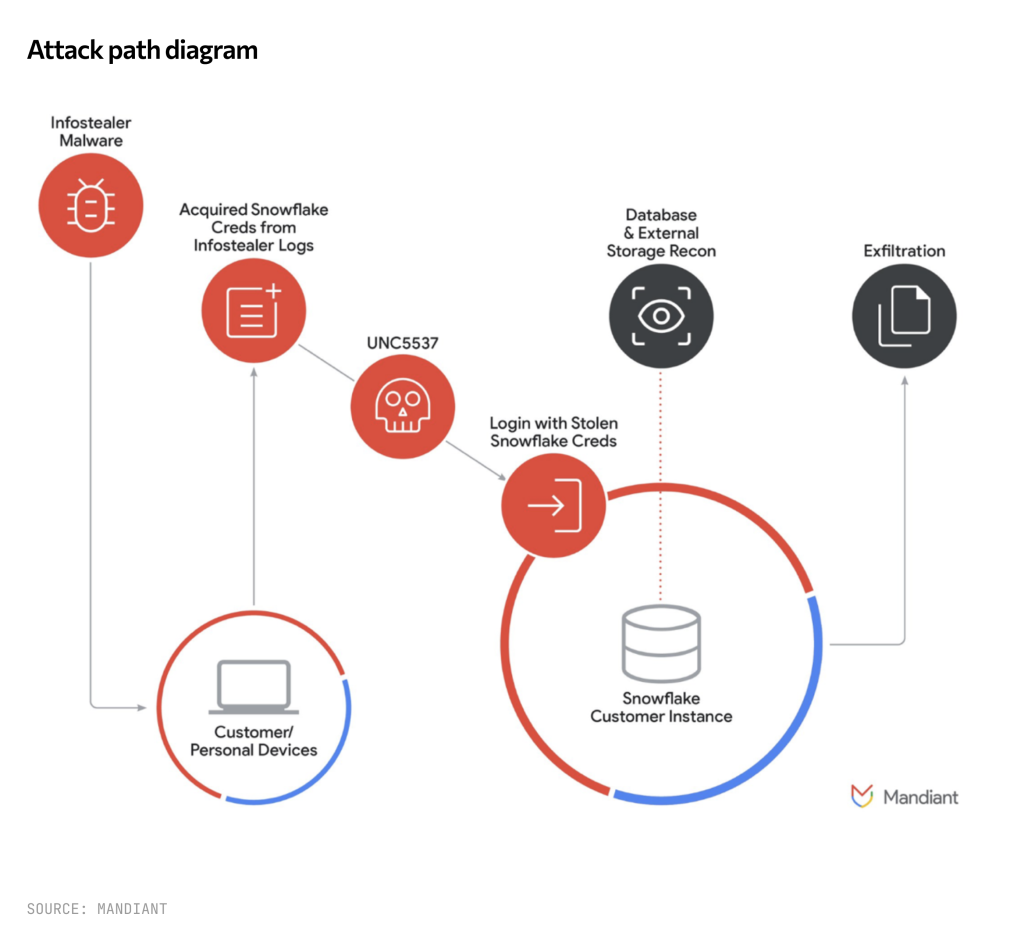

A recent Mandiant research note on the Snowflake attacks earlier this year also points to attackers’ changing tactics, including credential theft: “Mandiant’s investigation has not found any evidence to suggest that unauthorized access to Snowflake customer accounts stemmed from a breach of Snowflake’s enterprise environment. Instead, every incident Mandiant responded to associated with this campaign was traced back to compromised customer credentials.”

Economic cost of downtime justifies investment in security

We also recently outlined the growing severity of economic losses from cyber incidents. The reason is simple: when networks go down (i.e., when technology and digital systems don’t work as intended), the economic impact is real.

Here is what companies themselves report:

– 45% report loss of revenue

– 38% report loss of customers

Downtime costs large organizations an average of $200 million annually. (Again, 20-25% of downtime events are caused by cyberattacks.)
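To connect those two figures, a rough back-of-the-envelope estimate is possible, but only under an explicit assumption: that a cyber-caused downtime event costs about as much as the average downtime event, so the cyber share of cost matches the 20-25% share of events. That assumption is ours, not the sources’, and the helper `cyber_downtime_cost` below is a hypothetical name used purely for illustration.

```python
# Figures quoted in the text.
AVG_ANNUAL_DOWNTIME_COST = 200_000_000  # USD per large organization per year
CYBER_SHARE_LOW = 0.20   # low end of the share of downtime *events* caused by cyberattacks
CYBER_SHARE_HIGH = 0.25  # high end of that share


def cyber_downtime_cost(total_cost: float, cyber_share: float) -> float:
    """Hypothetical helper: rough annual downtime cost attributable to cyberattacks.

    ASSUMPTION: cost scales with the share of events, i.e. cyber-caused
    outages are about as expensive as the average outage. Real costs may differ.
    """
    return total_cost * cyber_share


low = cyber_downtime_cost(AVG_ANNUAL_DOWNTIME_COST, CYBER_SHARE_LOW)
high = cyber_downtime_cost(AVG_ANNUAL_DOWNTIME_COST, CYBER_SHARE_HIGH)
print(f"Estimated cyber-related downtime cost: ${low / 1e6:.0f}M to ${high / 1e6:.0f}M per year")
```

Under that assumption, cyber-related downtime would account for roughly $40 million to $50 million per large organization per year; if cyber outages tend to be longer or costlier than average, the true figure would be higher.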

CISOs and CFOs understand the business imperative of investing in security: Global 2000 companies spend an average of $23.8 million on cybersecurity tools and $19.5 million on observability tools. Gartner and McKinsey project 13-14% growth in the global cybersecurity market in 2024, on top of the more than $200 billion already being spent, so companies are continuing to invest heavily in protection.

Security is improving, but as the threat detection figures illustrate, teams struggle to keep pace with the speed and scale of digital transformation. What is missing are better options for assessing the risk and structuring programs to address it.

When looking at the facts, the need for a different approach is clear

The features that make cyber such a dynamic risk are clear:

- Constantly changing, expanding corporate networks into which security teams have limited visibility, and

- Human attackers who constantly change their tactics, techniques, and procedures to circumvent the controls designed to keep them out of networks and away from data.

This reality does not lend itself to assessing the risk in a one-size-fits-all way once a year during insurance renewals. Yet that is the standard market approach.

Seen this way, it’s obvious that a different approach to understanding and assessing technology risk is needed. But simply articulating that need isn’t enough for risk and security teams facing down determined adversaries and potential loss events.

What matters is building new products that address the pain points of risk and insurance directors and security teams and deliver better outcomes. That is one reason we invested in putting the CyFi™ Platform in the hands of these teams.